Consider the excitement you feel when watching a movie mystery or reading a suspenseful book. At every unexpected turn, the adrenalin rushes and your mind thrills as you eagerly anticipate what might happen next. Our brains simply love predicting and reacting to unexpected twists and turns!

In the world of music, something similar happens when we subconsciously predict what notes will come next in a melody or harmony. And the satisfaction we get when those predictions are met (or sometimes broken) is a big part of why we enjoy music so much.

This is why it’s so important to train AI systems to understand which combinations of musical events lead to anticipation and surprise. It allows them to recognize and create more interesting music and predict our emotional reactions with far greater accuracy.

Computer Analysis of Musical Expectation

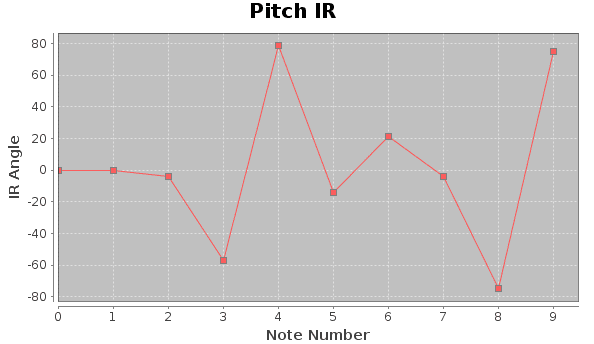

Over the past several decades I’ve developed software designed to study the science of musical anticipation and help us understand how our brains want melodies to unfold. A key element of this process is a vector I call “Pitch IR” that can be plotted to show how much we expect certain musical notes to pop up at specific times. Here’s how it works…

Let’s take a melody – like this tune from Chopin’s Etude Op.10 No.6 in E-flat minor:

As each note in the melody passes, your brain has a private conversation with itself based on a stream of constant predictions… “What will the next note sound like? Was the last note what I expected? Wow! That one was a shock… I hope the next note is more predictable… Ah… that’s better. But that surprise sure was fun! Maybe it’ll happen again?”

My “Pitch IR” vector measures this ongoing internal dialog within music and can map out the emotional journey. Here’s what the first 9 values of the “Pitch IR” vector look like for the Chopin melody above. (The higher the value, the stronger the sense of expectation at that point in the melody.) See if it matches your feelings as you listen.

With this in hand, it’s possible to search catalogs of music to find similar patterns of note expectation. Not similar melodies… but similar patterns of expectation. In other words, the software can discover a critical aspect of the music’s emotional effect over time.
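To make the idea of searching by expectation pattern concrete, here is a minimal sketch in Python. It is not the software described in this article. It assumes each melody has already been reduced to a list of expectation values of equal length, and it swaps in plain Pearson correlation as a stand-in similarity measure. The function names, the catalog titles, and all of the numbers are hypothetical.

```python
import math

def pearson(a, b):
    """Pearson correlation between two equal-length expectation curves."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("curves must have the same length (at least 2)")
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0  # a flat curve has no shape to compare
    return cov / math.sqrt(var_a * var_b)

def rank_by_expectation_shape(query, catalog):
    """Return (title, score) pairs sorted from most to least similar."""
    scores = [(title, pearson(query, curve)) for title, curve in catalog.items()]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)

# Made-up values for illustration only; these are not real analysis output.
query = [0.2, 0.8, 0.5, 0.9, 0.3, 0.7, 0.4, 0.6, 0.1]
catalog = {
    "tune_a": [0.3, 0.9, 0.6, 1.0, 0.4, 0.8, 0.5, 0.7, 0.2],  # same shape, shifted up
    "tune_b": [0.8, 0.2, 0.5, 0.1, 0.7, 0.3, 0.6, 0.4, 0.9],  # mirrored shape
}
ranking = rank_by_expectation_shape(query, catalog)
```

Correlation ignores the overall level of a curve and looks only at how it rises and falls, which is the point here: two tunes built from different notes can still score as close matches if their expectation curves move together.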

And in this case, the algorithm found a similar expectation journey in the opening tune from Beethoven’s Op.7 Sonata in E-flat major.

While they don’t sound the same, the computer connects the two pieces of music through an identifiable aspect of the music’s emotional effect. The two melodies share a similar emotional journey – like two characters in different novels that face different situations but follow a common path.

Even though these melodies have different musical features, their expectation patterns are alike, and understanding these patterns is a crucial step to making computers more musically creative. Now let’s shift to the world of opera, where music and storytelling combine.

Discovering Emotional Journeys With AI

In Monteverdi’s opera “L’incoronazione di Poppea,” the story culminates in a deeply emotional expression of love between Nero and Poppea. The text, “Pur ti miro, pur ti godo” (I gaze at you, I possess you), says it all and the ending is sublime… You can hear it below.

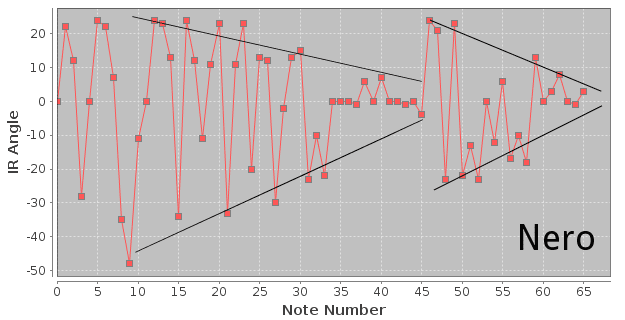

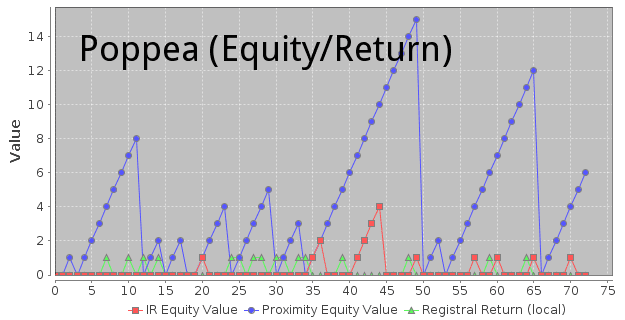

And guess what? If we ask the algorithm to analyze the individual melodies sung by Nero and Poppea, we find they share a similar pattern of musical expectation! A similar journey of tension and release. In fact, when graphed, the expectation patterns in this duet look a bit like a pendulum swinging back and forth. Both voices start out exhibiting a wide range of emotion but eventually settle into a stable rhythm and come together, symbolizing the lovers’ emotional union.

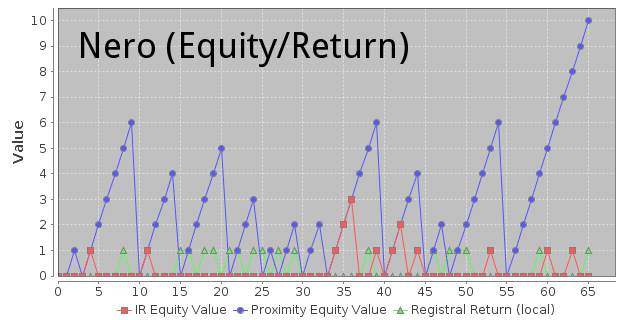

Furthermore, Nero’s melody seems to guide them toward this emotional connection. Nero begins settling into the expected rhythm earlier than Poppea – an effect we can measure through something I call “Pitch Equity Parameters” that describe how stable a melody is (or isn’t) over time.

Although Poppea’s melody contains about 10% more notes, Nero’s tune suggests stability more often, through more frequent local registral return events. And while the two voices contain an equal number of IR (expectation) equity events, Nero’s consistently occur before Poppea’s, as if to subtly urge her toward equilibrium and unification in the final cadence.
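For readers who like to see the mechanics, the sketch below shows one way these two comparisons could be approximated in Python. It is an illustration under stated assumptions, not the “Pitch Equity Parameters” themselves, which are not defined in this article. It assumes a simplified definition of a registral return (the melody moves away from a pitch, reverses direction, and lands within two semitones of where it started), and it assumes events in the two voices can be paired in order of occurrence. The function names, pitches, and times are all hypothetical.

```python
def registral_returns(pitches, tolerance=2):
    """Indices where the melody returns to (nearly) the pitch two notes back.

    pitches: MIDI note numbers. A return is counted at index i when the
    melody reverses direction at note i-1 and note i lands within
    `tolerance` semitones of note i-2. This is a simplified, assumed
    definition used only for illustration.
    """
    hits = []
    for i in range(2, len(pitches)):
        first, middle, last = pitches[i - 2], pitches[i - 1], pitches[i]
        reversed_direction = (middle - first) * (last - middle) < 0
        came_back = abs(last - first) <= tolerance
        if reversed_direction and came_back:
            hits.append(i)
    return hits

def lead_fraction(times_a, times_b):
    """Fraction of paired events in which voice A's event comes first.

    Events are paired in order of occurrence (an assumption); any unpaired
    events at the end of the longer list are ignored.
    """
    pairs = list(zip(times_a, times_b))
    if not pairs:
        return 0.0
    return sum(1 for a, b in pairs if a < b) / len(pairs)

# Made-up melody and event times (in beats) for illustration only.
melody = [60, 67, 60, 62, 64, 72, 65]
returns = registral_returns(melody)                          # [2, 6]
lead = lead_fraction([1.0, 4.0, 9.0], [2.0, 5.0, 8.5])      # 2 of 3 pairs
```

A count of returns per voice gives a rough stability measure, and a lead fraction close to 1.0 would correspond to one voice consistently reaching its events before the other.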

It’s as if Nero is gently nudging Poppea to find a musical expression of balance at the end of the duet, creating a satisfying musical unity. And this entire emotional journey can be measured and understood through intelligent computer analysis!

This is just a brief glimpse into the realm of codifying musical tension and release, but I hope it provides some insight into how it’s possible for intelligent music algorithms to discover and compare emotional journeys. In the quest to enable AI systems to generate more interesting and engaging music, we must learn to code the enduring patterns that make music universally gratifying. After all, our ultimate goal is to ensure the output of AI systems resonates with listeners and paves the way for future artistic innovation.