We all love music because of how it makes us feel, and while it may not seem immediately natural, music can be thought of as information to be analyzed and represented visually or mathematically. Music theorists have been doing this for centuries, breaking music into its smallest elements to make sense of its underlying structure and patterns. Analyzing music with AI, however, poses slightly different challenges.

Before we dive in, let’s look at reasons we might want to reduce music to discrete bits of data in the first place:

- To share it with others by writing it down.

- For analytical purposes, such as learning how great music works.

- To enable computers to process and manipulate musical language.

Writing music down seems straightforward enough. After all, if someone wants to communicate a bunch of complex sounds to other people, they’ll either need to demonstrate them directly or find a way to put them on paper. But as we’ll see, the process of reducing the rich experience of music to dots on paper isn’t as simple as jotting down a grocery list or even writing down your personal thoughts about last night’s dreams.

Music Notation: A Brief History

The history of Western music notation traces back to the 9th century with the advent of Gregorian chant. Why? Well, the Catholic Church had a problem – religious practitioners across the expanding world were singing wildly different versions of the sacred chant melodies. Blasphemy! Time to write them down. The church developed a simple spatial system called ‘neumes’ to represent music in writing, but it left a lot to be desired. In fact, early neumes only indicated the general shape of a melody and didn’t communicate the precise notes or rhythms to be sung.

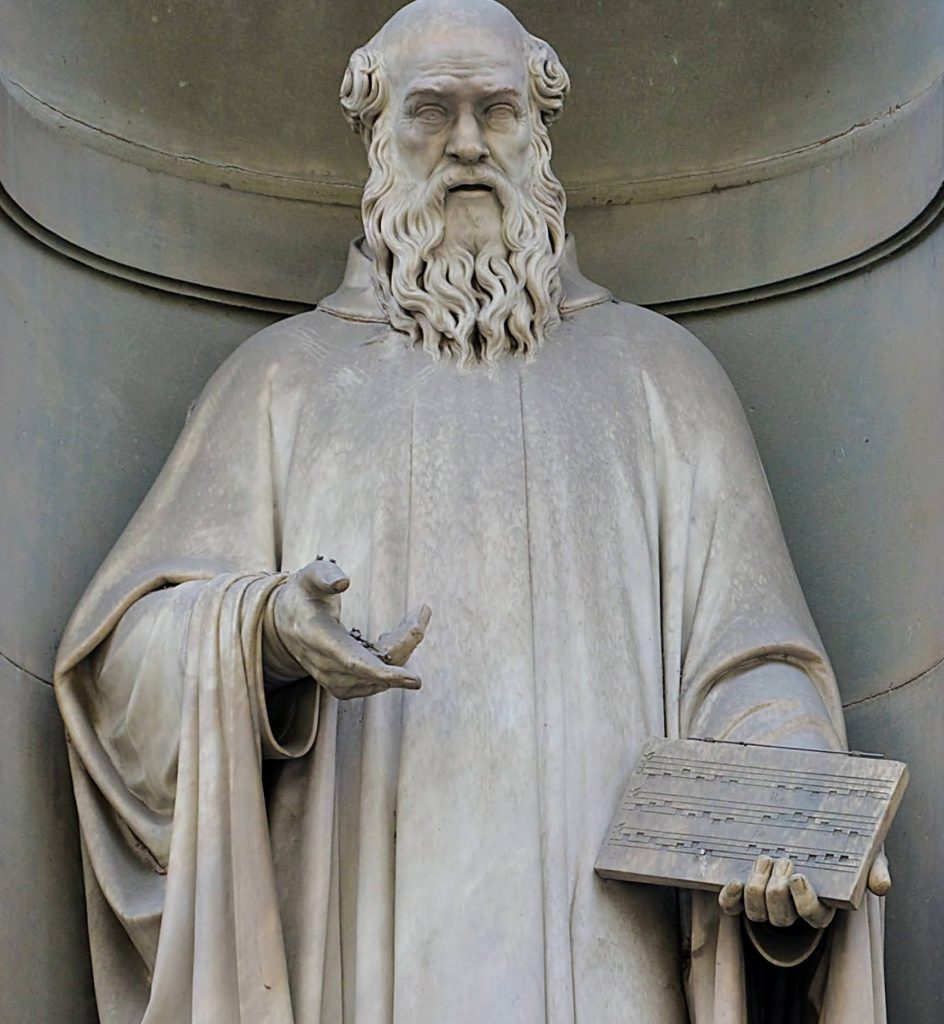

In the early 11th century, Guido of Arezzo introduced improvements like the musical staff and a more comprehensive notation for ensembles, which paved the way for further advancements in rhythmic notation in the early Renaissance. Ultimately this work forged a path for the creation of scores, where multiple parts could be written down in a standard way, making it easier to perform and transport complex music. And while we have access to many scores from these early years, not all aspects of the actual sounds were notated, so exactly how people performed and expressed this music is subject to ongoing debate.

Even as notational systems matured to include the variations in dynamics and phrasing that became increasingly important in classical and romantic music, they were still missing essential information. By the 20th century, compositions called for even more complex sounds that often required special symbols to indicate unusual timbral effects produced with extended performance techniques. And with the advent of purely electronic music in the 1950s, composers resorted to drawing pictorial graphs and documenting methodology in lists of detailed instructions.

Feeling ALL the Feels

Even with all of the workarounds mentioned above, advanced notation still fails to adequately capture the personality of individual musicians. With every performance, musicians put their unique personality on display. One could say that a musician’s identity is the difference between a notated score and the actual sound of their performance: their tendencies in micro-timing, dynamics, and timbre, along with other unique stylistic choices.

Computers struggle to capture the subtle nuances that give ‘life’ and ‘feel’ to human performances. These personality-driven subtleties are in many ways at the core of what we love about human-generated music, so it is essential to codify them in the data we feed to intelligent music systems. And as we’ve discussed, many of these nuances aren’t accounted for in symbolic notation. So in order to succeed, we must build algorithms that seek out these nuances in audio recordings and quantify them accurately enough to use as training data.
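To make the idea of identity as "the difference between score and performance" concrete, here is a minimal Python sketch of the micro-timing part alone. Everything in it is an assumption for illustration: the onset values are made up, the tempo is held fixed at 120 BPM, and the `timing_deviations` helper is hypothetical. In practice the performed onsets would have to come from an audio analysis step that is not shown here.

```python
# Hypothetical data: where the score says each note starts (in beats)
# and where a performer actually played it (in seconds).
score_onsets_beats = [0.0, 1.0, 2.0, 3.0]
performed_onsets_sec = [0.00, 0.52, 0.98, 1.53]
tempo_bpm = 120  # assumed constant tempo; real performances drift


def timing_deviations(score_beats, performed_sec, bpm):
    """Return performed-minus-notated onset time, in milliseconds, per note.

    Positive values mean the note was played late, negative values early.
    """
    if len(score_beats) != len(performed_sec):
        raise ValueError("score and performance must have the same note count")
    sec_per_beat = 60.0 / bpm
    return [
        round((played - beat * sec_per_beat) * 1000.0, 3)
        for beat, played in zip(score_beats, performed_sec)
    ]


deviations_ms = timing_deviations(
    score_onsets_beats, performed_onsets_sec, tempo_bpm
)
print(deviations_ms)
```

For this made-up data the second note lands 20 ms late and the third 20 ms early. A realistic version would also need tempo tracking, plus comparable measurements for dynamics and timbre.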

The Challenge of Music and LLMs

Another reason to dissect musical language is to understand how a piece of music works. In this case, we’re looking to find clear and efficient ways of examining some of the not-so-obvious patterns and inner structures. Today’s large language models, or LLMs (and other pretrained transformer algorithms), do very well at tokenizing written language and demonstrating a high-level abstraction of grammar. Simply by predicting the most likely next token in a series, they are capable of handling complex inferences, language translation, and even humor.

But unlike spoken language, the structure of music is best described as having an ‘emergent grammar’. Every new piece of music can establish its own rules and expectations, making the creation of a universal system for understanding and generating music a truly different kind of challenge. Additionally, cultural norms and individual preferences can play a significant role in shaping how music is composed, performed, and perceived.

Making Music FOR Machines

To do their magic, LLMs first divide text into small units called tokens (words or pieces of words) for processing. With music, however, there are no ‘words’. In fact, there are no obvious ‘blocks’ of information at all. Somehow we must create musical tokens that retain their musical identity and meaning. This can be done through a process we call segmentation.

Because music doesn’t have a universally agreed-upon syntax, and every new piece can establish its own rules and expectations, the segmentation process needs to be flexible and very carefully implemented. Many current approaches to segmentation for LLM processing simply apply a fixed time ‘slice’ when tokenizing music files. What’s wrong with that? Well, I mean… a stopped clock is right twice a day, so perhaps 20ms or 2s or 20s chunks of music will provide meaningful analysis data? But I wouldn’t bet on it.
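The problem with a fixed time slice can be seen in a small Python sketch. The note list is invented, and the gap-based segmenter is only a simple stand-in heuristic chosen for illustration; it is not the segmentation method referred to in this article, and the helper names (`fixed_slices`, `count_cut_notes`, `gap_segments`) are hypothetical.

```python
# Each note is (onset_ms, duration_ms, midi_pitch). Values are made up:
# two three-note phrases separated by a 600 ms silence.
notes = [
    (0, 400, 60), (500, 400, 62), (1000, 900, 64),     # first phrase
    (2500, 400, 65), (3000, 400, 67), (3500, 1000, 69),  # second phrase
]


def fixed_slices(notes, slice_ms):
    """Group notes by the fixed-width window their onset falls in."""
    groups = {}
    for note in notes:
        groups.setdefault(note[0] // slice_ms, []).append(note)
    return [groups[index] for index in sorted(groups)]


def count_cut_notes(notes, slice_ms):
    """Count notes that are still sounding when a window boundary passes."""
    cut = 0
    for onset, duration, _pitch in notes:
        last_sounding_ms = onset + duration - 1
        if onset // slice_ms != last_sounding_ms // slice_ms:
            cut += 1
    return cut


def gap_segments(notes, min_gap_ms):
    """Start a new segment whenever the silence before a note exceeds min_gap_ms."""
    segments = []
    prev_end = None
    for note in sorted(notes):
        onset, duration, _pitch = note
        if prev_end is None or onset - prev_end > min_gap_ms:
            segments.append([])
        segments[-1].append(note)
        prev_end = max(prev_end or 0, onset + duration)
    return segments


print([len(group) for group in fixed_slices(notes, 1500)])  # notes per window
print(count_cut_notes(notes, 1500))                         # notes cut by a boundary
print([len(seg) for seg in gap_segments(notes, 300)])       # notes per segment
```

With 1500 ms windows, the second phrase is split across two windows and one held note is cut by a boundary, while the gap heuristic keeps both phrases whole. Real music rarely offers such tidy silences, which is why segmentation needs more than either approach.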

Much of my own work (and patent) deals with the challenge of segmentation because it is at the heart of what’s needed to create meaningful music data. And other researchers are starting to uncover certain universals we can apply to segmentation when preparing music for AI systems.

Are there ways to break music down that reveal how it makes us feel? And what does computer analysis uncover that traditional techniques miss?

Music is Not Language… It’s MUSIC!

Although you probably don’t need further convincing, a recent paper authored by 10 top research scientists from MIT, Columbia, Rice, University of California, Carleton University, Harvard, and UCL surveyed a mountain of existing scholarship and conducted three fMRI experiments and a behavioral study, all to definitively conclude that music cognition and understanding happens outside of the language systems in our brains. Here’s an excerpt from the abstract:

… we obtained a clear answer: music does not recruit nor requires the language system. The language regions’ responses to music are generally low and never exceed responses elicited by non-music auditory conditions, like animal sounds. Further, the language regions are not sensitive to music structure: they show low responses to both intact and scrambled music, and to melodies with vs. without structural violations.

Pretty clear, no? This means that text-driven language AI strategies applied off the shelf will most likely need music-centric adaptations in order to produce the best and most useful results. To quote a not-so-famous character from rock history…

Information is not knowledge, knowledge is not wisdom, wisdom is not truth, truth is not beauty, beauty is not love, love is not music, music is the best!

– Girl from the bus

And I think we can add… Music is not language.

So let’s continue to explore other ways to analyze it.